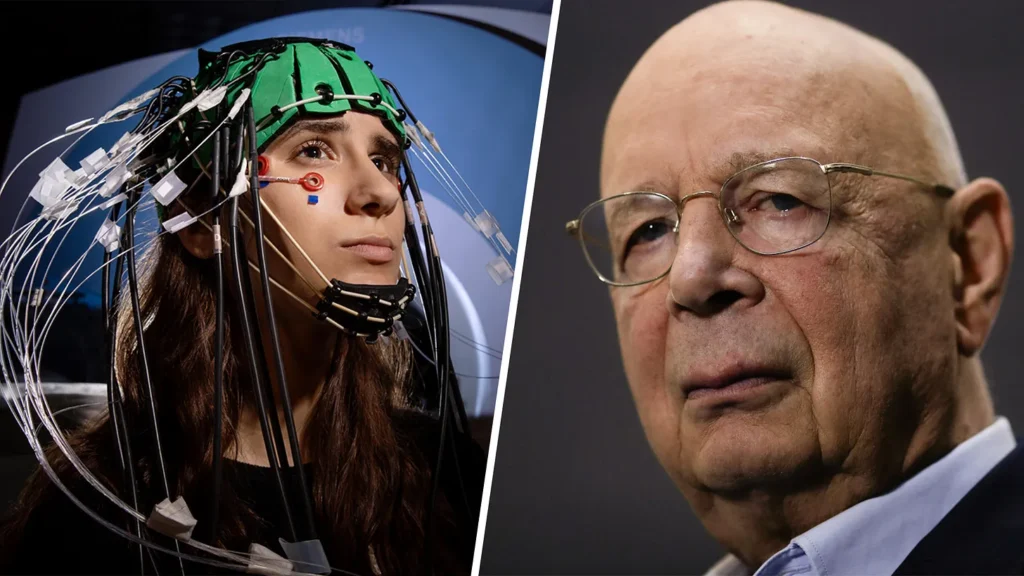

Emerging technologies can now decode people’s thoughts, interpret their emotions, and even generate images from their brain activity. While such advancements could revolutionize medicine and communication, concerns are growing over how governments and corporations might use them.

The World Economic Forum (WEF) has explored the idea of integrating neurotechnology into workplaces and governance, raising alarms about privacy, autonomy, and potential misuse.

How Thought Monitoring Works

Recent breakthroughs in brain-computer interfaces (BCIs) and AI-driven neural decoding allow machines to:

- Translate brain signals into text or images

- Detect emotions in real time

- Predict intentions before actions are taken

Companies such as Neuralink (Elon Musk’s venture), along with research institutions, are already testing these technologies for medical applications, such as helping paralyzed patients communicate. However, the WEF has discussed expanding their use into surveillance, workforce management, and even law enforcement.

Why This Is Controversial

Critics warn that allowing governments or employers to access mental data could lead to:

- Mass thought surveillance

- Workplace discrimination based on emotional states

- Manipulation through neuro-targeted propaganda

- Erosion of free will and cognitive liberty

A WEF report has suggested that such tools could help “improve productivity” by monitoring employee focus and stress levels. But civil liberties advocates argue this crosses an ethical red line, turning minds into another data stream for corporations and states to exploit.

The Fight for “Neurorights”

Some countries, such as Chile, have already begun legislating “neurorights” to protect mental privacy. Meanwhile, technologists and ethicists are calling for strict regulations before thought-reading technology becomes mainstream.

As neurotechnology advances, the debate intensifies: Should our thoughts remain private, or will they become just another metric for governments and employers to track?

The answer could determine whether future societies respect mental freedom or normalize thought surveillance as part of daily life.